Simultaneous Localization and Mapping (SLAM)

Artificial intelligence methods (especially deep learning) have made great progress over classical methods in processing camera and LiDAR data. Their major advantage is that the neural networks can be trained using only a set of input data (e.g., images) and the corresponding desired output data (e.g., motion estimates), without having to manually design dedicated algorithms for the problem. Through this so-called “end-to-end” training, the internal network parameters are learned automatically so that the network output matches the desired output as closely as possible.
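
As a toy illustration of learning parameters purely from (input, desired output) pairs, the sketch below fits a one-neuron "network" y = w·x + b by gradient descent on the mean squared error. It is only an analogy: real networks such as the one in [1] have millions of parameters and are trained with deep learning frameworks, and the learning rate and epoch count here are arbitrary assumptions.

```python
# Toy analogy for end-to-end training: the model y = w*x + b learns its
# parameters from example pairs; the underlying rule is never coded in.

def train(pairs, lr=0.05, epochs=2000):
    """Fit w and b by full-batch gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(pairs)
    for _ in range(epochs):
        # Gradients of L = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in pairs) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in pairs) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

if __name__ == "__main__":
    # Training data generated from y = 2x + 1.
    data = [(x, 2 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0]]
    w, b = train(data)
    print(f"w={w:.3f}, b={b:.3f}")  # close to w=2, b=1
```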

The figure below shows the architecture of a deep neural network that estimates camera motion (“pose”, right) from a sequence of images (left) [1]. It consists of a convolutional neural network (CNN, yellow) that derives motion information from two consecutive images, and a recurrent neural network (RNN) that learns the motion dynamics and infers the relative motion (pose). RNNs can process sequences (e.g., speech, text) and, through their internal “memory,” can learn temporal dependencies in the input data. The figure below shows the estimated trajectory of a car trip (in red).
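
A network like the one in [1] outputs relative motions between consecutive frames; a trajectory such as the red one is obtained by chaining these relative poses. The sketch below shows that chaining under a simplifying assumption: planar motion (x, y, heading) instead of full 6-DoF poses. The step values are hypothetical network outputs.

```python
import math

def compose(pose, delta):
    """Apply a relative motion (dx, dy, dtheta), given in the vehicle frame,
    to a global pose (x, y, theta)."""
    x, y, th = pose
    dx, dy, dth = delta
    # Rotate the body-frame displacement into the global frame, then add it.
    gx = x + dx * math.cos(th) - dy * math.sin(th)
    gy = y + dx * math.sin(th) + dy * math.cos(th)
    # Keep the heading wrapped to (-pi, pi].
    gth = math.atan2(math.sin(th + dth), math.cos(th + dth))
    return (gx, gy, gth)

def integrate(relative_poses, start=(0.0, 0.0, 0.0)):
    """Chain per-frame relative motions into a trajectory (list of global poses)."""
    trajectory = [start]
    for delta in relative_poses:
        trajectory.append(compose(trajectory[-1], delta))
    return trajectory

if __name__ == "__main__":
    # Hypothetical outputs: 1 m forward, then a 90 degree left turn, four times.
    steps = [(1.0, 0.0, math.pi / 2)] * 4
    for p in integrate(steps):
        print(tuple(round(v, 3) for v in p))
```

Because every pose is built on the previous one, a small error in any single step is carried into all later poses; this is why pure odometry drifts over time.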

Camera and LiDAR data are used both for positioning and for 3D map generation. These data can also be used to create digital HD maps, which contain georeferenced annotations of static objects. Such digital maps can in turn support the position solution.
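
One way a georeferenced map object can support the position solution: if the sensor sees a mapped landmark at a known offset in the vehicle frame, the vehicle position follows by subtracting the rotated offset from the landmark's map position. The sketch below assumes a 2D local map frame, a known heading (e.g., from the IMU), correct landmark associations, and a simple unweighted average over landmarks; the function name and data layout are hypothetical.

```python
import math

def position_from_landmarks(observations, heading):
    """Estimate the vehicle position in a local map frame.

    observations: list of ((map_x, map_y), (rel_x, rel_y)) pairs, where map_* is
    the georeferenced landmark position from the HD map and rel_* is where the
    sensor sees that landmark in the vehicle frame (x forward, y left).
    heading: vehicle yaw in radians, assumed known.
    """
    c, s = math.cos(heading), math.sin(heading)
    estimates = []
    for (mx, my), (rx, ry) in observations:
        # landmark_map = vehicle + R(heading) * landmark_rel, solved for vehicle.
        estimates.append((mx - (c * rx - s * ry), my - (s * rx + c * ry)))
    # Unweighted average of the per-landmark estimates.
    n = len(estimates)
    return (sum(e[0] for e in estimates) / n, sum(e[1] for e in estimates) / n)

if __name__ == "__main__":
    # Vehicle facing +y; one landmark 10 m ahead, one 2 m to the left.
    obs = [((10.0, 15.0), (10.0, 0.0)), ((8.0, 5.0), (0.0, 2.0))]
    print(position_from_landmarks(obs, math.pi / 2))  # approximately (10.0, 5.0)
```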

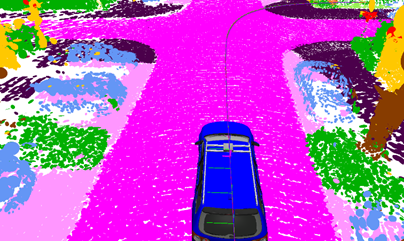

In addition to motion estimation, AI methods are also used for semantic segmentation. Here, a pre-trained neural network [2] assigns each point of a 3D point cloud (from a LiDAR scan) to an object class, e.g., road or car (figure below). This scene information is then used to stabilize motion estimation and optimize map generation [2].
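
A simple illustration of how per-point labels can stabilize motion estimation: points of potentially moving classes are dropped before scan registration, so that other vehicles do not bias the estimated ego-motion. This crude class-based rule also removes parked cars and is only a stand-in for the more careful filtering in [2]; the class IDs below are hypothetical.

```python
# Hypothetical class IDs; real networks and datasets define their own label maps.
ROAD, BUILDING, VEGETATION, CAR, PERSON = range(5)
MOVABLE = {CAR, PERSON}

def static_points(points, labels, movable=MOVABLE):
    """Keep only points whose predicted class is not potentially moving."""
    if len(points) != len(labels):
        raise ValueError("each point needs exactly one label")
    return [p for p, lab in zip(points, labels) if lab not in movable]

if __name__ == "__main__":
    scan = [(5.0, 0.0, 0.0), (5.0, 0.1, 0.0), (12.0, 3.0, 1.5), (8.0, -2.0, 0.5)]
    labels = [ROAD, ROAD, BUILDING, CAR]
    print(static_points(scan, labels))  # the CAR point is removed
```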

Through the information they derive with the help of AI, such as position and map, the two visual sensors, camera and LiDAR, contribute to the function monitoring of the complete multi-sensor positioning system. An important factor is that LiDAR and camera can provide a position solution independently of GNSS reception, so this solution cannot be affected by GNSS jamming or spoofing. The function monitoring itself is also supported by AI-based methods.
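
Because the vision-based position does not depend on GNSS, it can serve as a reference for checking the GNSS fix. The sketch below shows one generic consistency test (not necessarily the monitoring used here): the squared, variance-normalized difference between the two fixes is compared with the 99% chi-square quantile for 2 degrees of freedom. It assumes both positions are in the same local frame and that the per-axis errors are independent; the function name and variances are hypothetical.

```python
CHI2_2DOF_99 = 9.21  # 99% quantile of the chi-square distribution, 2 degrees of freedom

def gnss_consistent(gnss_xy, gnss_var, vision_xy, vision_var, threshold=CHI2_2DOF_99):
    """Return True if the GNSS fix agrees with the vision-based fix.

    Positions are (x, y) in metres in a common local frame; variances are per axis
    (m^2) and assumed independent, so each difference has variance gnss + vision.
    """
    d2 = 0.0
    for g, v, sg, sv in zip(gnss_xy, vision_xy, gnss_var, vision_var):
        d2 += (g - v) ** 2 / (sg + sv)
    return d2 <= threshold

if __name__ == "__main__":
    print(gnss_consistent((0.5, 0.3), (1.0, 1.0), (0.0, 0.0), (0.04, 0.04)))   # True
    print(gnss_consistent((20.0, 0.0), (1.0, 1.0), (0.0, 0.0), (0.04, 0.04)))  # False, e.g. spoofed
```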

References:

[1] S. Wang, R. Clark, H. Wen, and N. Trigoni, “DeepVO: Towards end-to-end visual odometry with deep recurrent convolutional neural networks,” IEEE International Conference on Robotics and Automation (ICRA), 2017.

[2] X. Chen, A. Milioto, E. Palazzolo, P. Giguère, J. Behley, and C. Stachniss, “SuMa++: Efficient LiDAR-based Semantic SLAM,” IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019.

[3] A. Geiger, P. Lenz, and R. Urtasun, “Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite,” IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2012.

SLAM: Providing High Accuracy in Challenging Environments

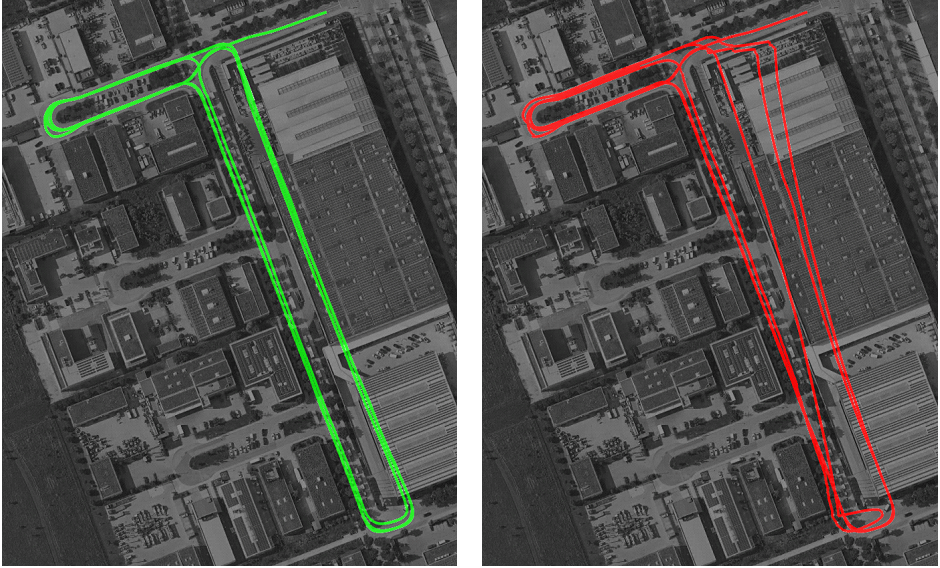

A main objective of using vision sensors (e.g., LiDAR) is to provide an accurate position even in challenging environments. In the following test campaign, this is achieved by multi-sensor fusion of GNSS with IMU, LiDAR, camera and odometry, which increases the accuracy of the PVT (position, velocity, time) solution in situations where no GNSS signal reception is possible. The figure below shows a very challenging indoor/outdoor environment, where the indoor part is a fully roofed parking area. During the test drive, the vehicle enters and leaves the parking area multiple times, leading to several indoor/outdoor transitions. Both the green and the red solution are multi-sensor solutions combining GNSS, IMU and odometry data; the green solution is additionally fused with LiDAR/camera data. The red solution, without LiDAR/camera, shows a significant drift inside the parking area due to the complete loss of GNSS signals, which the fusion with IMU and odometry alone could not prevent.

The LiDAR/camera solution, however, provides an absolute position even in these challenging situations. Fusing this vision-based position in the extended Kalman filter (EKF) makes the overall solution robust and accurate when there is no GNSS reception.
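
The effect described above can be reproduced with a much simpler filter than the EKF used here. The sketch below is a one-dimensional linear Kalman filter along the driving direction, assuming that odometry/IMU provides a displacement per step with process noise q and that the vision-based position is an absolute measurement with variance r. The values of q and r are arbitrary assumptions; the real system estimates a multi-dimensional, nonlinear state.

```python
def kf_predict(x, p, displacement, q):
    """Propagate position x and variance p with an odometry/IMU displacement.
    Process noise q is added every step, so p grows without measurements (drift)."""
    return x + displacement, p + q

def kf_update(x, p, z, r):
    """Correct the state with an absolute position measurement z of variance r."""
    k = p / (p + r)  # Kalman gain: trust the measurement more when p >> r
    return x + k * (z - x), (1.0 - k) * p

def run(displacements, vision_fixes, q=0.01, r=0.04):
    """Fuse per-step displacements with optional vision fixes (None = no fix)."""
    x, p = 0.0, 0.0
    for d, z in zip(displacements, vision_fixes):
        x, p = kf_predict(x, p, d, q)
        if z is not None:
            x, p = kf_update(x, p, z, r)
    return x, p

if __name__ == "__main__":
    steps = [1.0] * 100                              # 100 odometry steps of 1 m
    no_fix = [None] * 100                            # no GNSS and no vision fix
    with_fix = [float(i + 1) for i in range(100)]    # noise-free vision fix every step
    print(run(steps, no_fix))    # variance grows to about 1.0 m^2
    print(run(steps, with_fix))  # variance stays below 0.02 m^2
```

Without fixes the variance grows linearly with the number of steps, which mirrors the drift of the red solution; with regular vision fixes it settles at a small steady-state value.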

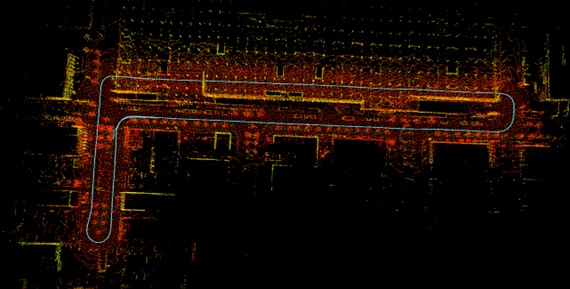

The next figure shows the corresponding LiDAR 3D map and the vehicle trajectory, both determined by LiDAR SLAM. The entire upper part of the trajectory, from left to right, lies in the indoor environment with no satellite visibility; the remaining parts of the track are outdoor roads with moderate to good satellite visibility.
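
A core step in building such a map is registering each new scan against previous data. The sketch below shows the closed-form least-squares rigid alignment of two 2D point sets with known correspondences, which is the inner step of ICP-style registration (ICP alternates nearest-neighbour matching with this step). It is a generic illustration assuming 2D points and given correspondences; real LiDAR SLAM systems use more elaborate 3D registration.

```python
import math

def align_2d(source, target):
    """Least-squares rigid transform (theta, tx, ty) mapping source points onto
    target points, assuming source[i] corresponds to target[i]."""
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    cx = sum(p[0] for p in target) / n
    cy = sum(p[1] for p in target) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(source, target):
        # Work with centroid-free coordinates.
        ax, ay, bx, by = ax - sx, ay - sy, bx - cx, by - cy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    # The rotation angle that maximizes the alignment of the centred point sets.
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    return theta, cx - (c * sx - s * sy), cy - (s * sx + c * sy)

if __name__ == "__main__":
    scan = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
    th, tx, ty = 0.3, 2.0, -1.0  # true motion between the two scans
    c, s = math.cos(th), math.sin(th)
    moved = [(c * x - s * y + tx, s * x + c * y + ty) for x, y in scan]
    print(align_2d(scan, moved))  # recovers approximately (0.3, 2.0, -1.0)
```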

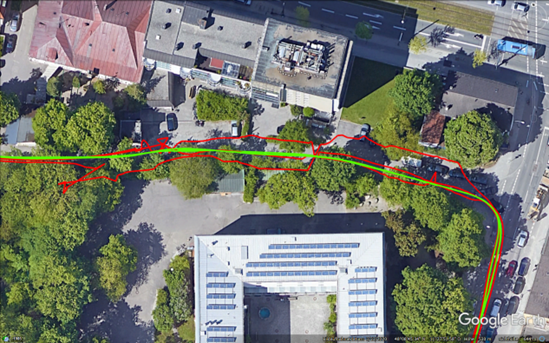

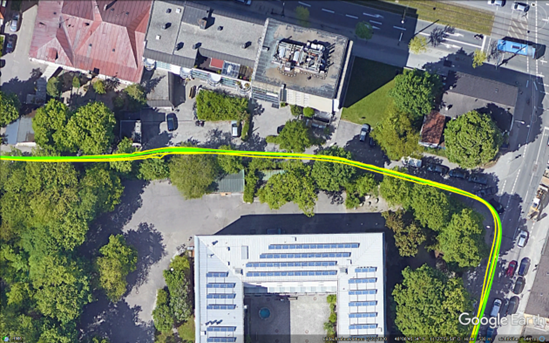

Another challenging section, shown in the next figure, is a narrow street surrounded by trees and buildings. Multipath and signal shadowing lead to low-quality signal reception.

Here, the multi-sensor fusion outperforms the classical Kalman filter solution, as the next figures show.

Tightly Coupled Multi-Sensor Fusion Products with SLAM Extension of ANavS®

ANavS® offers the following products for multi-sensor RTK/PPP positioning with SLAM extension:

AI-ROX – An enhancement of the A-ROX system with computer vision sensors and AI algorithms.

A-ROX – GNSS-INS tightly coupled positioning system.

EmBox – Compact GNSS RTK and Heading Solution.

G-ROX – All-Frequency, all-Constellation RTK Reference Station.
